Moving on to the second part where I am going to cover a few of the common questions and misunderstanding around the different load methods in FDMEE/Data Management, mainly concentrating on the “All data types” method.

The different load methods can be defined at application or data load rule level, for EPM cloud the methods currently available are:

Unless you have a specific requirement to load non-numeric data it is best to just stick with the “Numeric Data Only” method, though I have seen numerous posts in the Oracle forums where numeric data was being loaded but one of the all data type methods had been selected, this can cause problems due to the way the all data loads operate which I will get on to later.

If you are not aware, the numeric data only method loads data behind the scenes using an Essbase data load rule, for the cloud a file is created during the export stage, an Essbase load rule is created and the data is loaded using the file and rule.

The all data type method uses the outline load utility which you may be familiar with if you have been involved with on-premise planning, data is loaded directly through the planning layer instead of Essbase which allows non-numeric data to be loaded.

I have already covered in detail in previous blog posts the different all data type load methods, the “All data types with auto-increment of line item” used to be named “All data types in Planning File Format” and changed name without warning, this is the cloud remember.

You can read the two posts I put together about this method here and here, the posts were put together when the old naming convention was still in use, this should not be a problem as the functionality is exactly the same, just the name changed.

I also put together a post around loading non-numeric data with the “All data types with security” which you can read about here.

To complicate matters further when loading data using this method as a non-administrator then the user’s security is enforced and a REST API comes into play instead of the OLU, luckily I put together three posts which try to explain how the security operates with cloud and on-premise for both Planning and Essbase, to start to understand the security mystery then go here.

If you compare the load options currently available in on-premise FDMEE to the cloud then you will see they differ.

Before 11.1.2.4.220

From 11.1.2.4.220

The numeric loads can be defined to load data using either a file based Essbase data load rule which is the same as the cloud, alternatively you select to load using an Essbase SQL based load rule which will load the data directly from the FDMEE database repository.

There are two available all data type load methods like the cloud except the security option is not available.

Back to EPM cloud, there are a few strange functionality quirks when selecting one of the all data type load methods, these may well be bugs and will go away over time but at the moment some of the things to watch are for are.

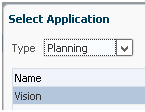

If you set one of the all data type load methods at target application level:

When you create a load rule, the rule will default to an all data type method and there is no option to override with “Numeric Data Only”.

So best to keep the target application load method to the default value of “Numeric Data Only” as this way it is possible to select any of the methods when defining a load rule.

Another strange one is if you have a load rule set to a load method of “Numeric Data Only”

Then change to one of the all data type methods.

It is not possible to switch it back to numeric data only, the only values available are the all data type load methods.

If you try and enter “Numeric Data Only” manually and save then you get hit with an error.

If you try and use migration to import the rules back in it doesn’t seem to overwrite the property, yes you can delete the rule and recreate but remember if you try and delete the rule and data has already been loaded, then the data could be deleted.

I do consider them to be bugs and if they are ever addressed and fixed I will update this post.

Right, on to an issue that I have seen raised a few times which is usually down to load method selected:

“I am selecting the export method of “Add Data” but the data is being replaced in the target application”

To understand the problem, I think it is worth going through some examples of how the functionality works between numeric and all data type load methods.

To start with let us take a numeric type load with a simple source file that contains one row of data.

The import format was set to type “Delimited – Numeric Data” and the rule load method defined as “Numeric Data Only”.

The data was into imported and validated in the workbench.

To load the data to the target application there are four export modes available.

The documentation provides a clear definition of what these options do, what it doesn’t mention is that these relate to the numeric data only load method.

Store Data — Inserts the value from the source or file into the target application, replacing any value that currently exists.

Replace Data — Clears all data for the POV in the target, and then loads from the source or file. For example, when you have a year of data in your Planning application but are only loading a single month, this option clears the entire year before performing the load.

Add Data — Adds the value from the source or file to the value in the target application. For example, when you have 100 in the source, and 200 in the target, then the result is 300.

Subtract Data — Subtracts the value in the source or file from the value in the target application. For example, when you have 300 in the target, and 100 in the source, then the result is 200.

Before loading the data, I created a form to match the POV and entered a value.

The data was then loaded and the export mode selected was “Add Data”

As you would expect the data value loaded was added to the existing data.

In the process logs you can see that an export file and Essbase data load rule are created.

INFO [AIF]: Creating data file: /u03/inbox/outbox/Vision_1448.dat

INFO [AIF]: Data file creation completed: 1

DEBUG [AIF]: Created rule file: AIF0286

INFO [AIF]: Saved rule file to essbase server

The data is then loaded directly to Essbase using the data file and load rule.

If you have worked with Essbase you will know that export mode options for replace, add and subtract match to those that can be set in an Essbase load data load rule.

If I run an export again and change the mode to “Store Data”.

This time the data in the target application will be replaced with that from the data load.

All makes perfect sense, so now let us switch over to an all a data type load, the import format was updated to a type of “Delimited – All Data Type” and the load method in the rule set to “All data types with security”.

The data value in the target application was set back to 500.

The data is reloaded into the workbench and the export mode set to “Add Data”, this is done with an administrator account which means the load should be carried out by the OLU.

The process logs highlight the load method is now using the outline load utility (OLU).

DEBUG [AIF]: Overrode info.loadMethod for the admin user: OLU

Outline data store load process finished. 1 data record was read, 2 data records were processed, 2 were accepted for loading (verify actual load with Essbase log files), 0 were rejected.

Reloading the planning form shows that the data has been replaced instead of added to.

The reason for this is that is the way the OLU operates and to be honest the all data type is really aimed at loading non-numeric data such as text, dates or smart lists, if you think about it, it just wouldn’t make sense trying to add non-numeric data.

How about if the process is repeated but this time with a non-administrator account, this should mean the REST API will be the method for loading the data (currently only EPM cloud supports this).

Run the export again with export mode set to “Add Data”.

In the process log you can see that because it is all data type load and a non-admin user the load is carried out with the REST API, if you want to understand more about this method and its limitations then refer to my “diving into the Essbase and Planning security mystery” posts.

DEBUG [AIF]: LOAD_METHOD:HPL, LOAD_TYPE:DATA, EXPORT_MODE:ADD_DATA

DEBUG [AIF]: Overrode info.loadMethod for the non-admin user: REST

DEBUG [AIF]: No need to connect to Essbase server.

DEBUG [AIF]: requestUrl: http://localhost:9000/HyperionPlanning/rest/v3/applications/Vision/plantypes/Plan1/importdataslice

INFO [AIF]: Number of rows loaded: 1, Number of rows rejected: 0

Refreshing the data form confirms the data has been added to the existing value.

With the REST API method, Data Management will generate a file containing JSON that is read and passed as the payload when calling the REST resource.

An example of the JSON created to load the data above is:

{

"aggregateEssbaseData":true,

"dateFormat":"MM-DD-YYYY",

"cellNotesOption":"Skip",

"dataGrid":{

"columns":[

[

"Actual"

]

],

"rows":[

{

"headers":[

"1110",

"110",

"Working",

"BaseData",

"P_000",

"FY17",

"Nov"

],

"data":[

"400"

]

}

]

}

}

The REST resource has a parameter called “aggregateEssbaseData”, the value is set to true when the export mode of “Add Data” is selected, if “Store Data” is selected then value is set as false.

The documentation on the REST API has some important information to consider when loading text and numeric data together using this method.

“aggregateEssbaseData which has a true or false value. If true, the values being saved will be added to the existing values. Only numeric values can be aggregated. Cells with Smart list, Text and Date data types will be rejected. If false, the data values will be overwritten.”

If you set the export mode to add data and there is a mixture of numeric and non-numeric data the non-numeric should be rejected, to prove this I added a row to the source to load text to a text type member.

If there is no existing data for the text member then the export is successful, if there is existing data then the export fails.

The process log confirms the numeric data was loaded and the text data was rejected.

INFO [AIF]: Number of rows loaded: 1, Number of rows rejected: 1

INFO [AIF]: List of rejected cells: ["[Actual, Text Example, 110, Working, BaseData, P_000, FY17, Nov]"]

ERROR [AIF]: The data load process has failed.

So if you are loading numeric data that needs to be added to existing data then carefully consider the outcome when selecting a load method.

There are also differences between the load methods when loading multiple records against the same POV, I will go through an example to demonstrate this.

This time I have added an additional row to the source file which is against the same member combination as the previous row.

The load method has been set to “Numeric Data Only”

The data is summed before it is loaded to the target application which you can see by switching the view in the UI to target.

The data export file that is created before being loaded to the target application also confirms this.

As expected the data in the target application is the same as the “Store Data” option was selected.

If we repeat the process using the “All data types with security” load method with an admin user then it acts differently.

Checking the form after a successful load reveals that only one data value has been loaded.

The data export file contains only one value.

This is because when using the all data type method, instead of the values being summed the maximum value is loaded to the target and replaces any existing value.

If the process is repeated using a non-admin account so the load method switches to using the REST API the functionality acts in the same way and the maximum value will be loaded.

Another reason to stick with the “Numeric Data Only” load method when loading numerical data.

On to the final question which is:

“Is mapping data values possible with the all data type?”

Currently it is not possible with on-premise though I am sure that will change in a future patch, it wasn’t possible in EPM Cloud until a while back when data dimension appeared from nowhere in the usual cloud type way, I don’t remember reading about it in the monthly update documentation.

If you look at the available dimensions in cloud there is a “Data” dimension available.

The data dimension is visible whether it is a numeric data only load or all data type load but it only relates to the latter, if you do try to use it for numeric data only loads then it will be ignored.

Update: Available in on-premise FDMEE from 11.1.2.4.220

It can also be ignored for all data type loads if there are no mappings required, if there are no mappings applied it will map the source to the target automatically.

There nothing that different about using the data dimension for mappings but it is worth just putting a quick demonstration of it in action.

I am going to through a simple example of loading Smart List data, I created a new Smart List based on members in a hierarchy.

The name and are automatically generated, the name is created by prefixing an underscore to the original member name and the label is a combination of member name and alias.

The Smart List was applied against two account members and a form created to check the output from the data management load.

The source file is extremely basic with the two account members and data value for the Smart List.

The import format was set as a file type of “Delimited – All Data Type”.

The data load rule load method was defined as “All data types with security”.

For this first example, no mappings were added to the data dimension.

The process log shows the data was loaded successfully as there were no rejections:

Outline data store load process finished. 2 data records were read, 3 data records were processed, 3 were accepted for loading (verify actual load with Essbase log files), 0 were rejected.

The data form shows that the values have been loaded though they don’t match any of the Smart List entries because they have not been mapped correctly.

In the above example, the values loaded are numeric so they still get loaded to Essbase even if they are mapped incorrectly, if you try to load invalid Smart List entries with text data like:

I cleared out the data entries in the form and ran the export again.

The load looks to be successful from the log as it records that data was loaded:

Outline data store load process finished. 2 data records were read, 3 data records were processed, 3 were accepted for loading (verify actual load with Essbase log files), 0 were rejected.

In reality, no data will have been loaded as it did not match any Smart List entries which means it will have no numerical ID associated with it to load to Essbase.

Moving on, back to the original source file but this time I will add a mapping to the data dimension.

I have used the format function to prefix the source value with an underscore so it matches the format for the name of the Smart List entry.

Refreshing the form shows that the data has been mapped correctly this time as the Smart List entries are displayed with their associated label.

It is also possible to map the data to the Smart List label instead of the name.

The data will be mapped successfully in the target application with either the name or the label of the Smart List entry.

I think that covers as much as I need to on all data type mappings as they operate in pretty much the same way as standard dimension mappings.

As always I hope you found this post useful, until next time..

The different load methods can be defined at application or data load rule level, for EPM cloud the methods currently available are:

Unless you have a specific requirement to load non-numeric data it is best to just stick with the “Numeric Data Only” method, though I have seen numerous posts in the Oracle forums where numeric data was being loaded but one of the all data type methods had been selected, this can cause problems due to the way the all data loads operate which I will get on to later.

If you are not aware, the numeric data only method loads data behind the scenes using an Essbase data load rule, for the cloud a file is created during the export stage, an Essbase load rule is created and the data is loaded using the file and rule.

The all data type method uses the outline load utility which you may be familiar with if you have been involved with on-premise planning, data is loaded directly through the planning layer instead of Essbase which allows non-numeric data to be loaded.

I have already covered in detail in previous blog posts the different all data type load methods, the “All data types with auto-increment of line item” used to be named “All data types in Planning File Format” and changed name without warning, this is the cloud remember.

You can read the two posts I put together about this method here and here, the posts were put together when the old naming convention was still in use, this should not be a problem as the functionality is exactly the same, just the name changed.

I also put together a post around loading non-numeric data with the “All data types with security” which you can read about here.

To complicate matters further when loading data using this method as a non-administrator then the user’s security is enforced and a REST API comes into play instead of the OLU, luckily I put together three posts which try to explain how the security operates with cloud and on-premise for both Planning and Essbase, to start to understand the security mystery then go here.

If you compare the load options currently available in on-premise FDMEE to the cloud then you will see they differ.

Before 11.1.2.4.220

The numeric loads can be defined to load data using either a file based Essbase data load rule which is the same as the cloud, alternatively you select to load using an Essbase SQL based load rule which will load the data directly from the FDMEE database repository.

There are two available all data type load methods like the cloud except the security option is not available.

Back to EPM cloud, there are a few strange functionality quirks when selecting one of the all data type load methods, these may well be bugs and will go away over time but at the moment some of the things to watch are for are.

If you set one of the all data type load methods at target application level:

When you create a load rule, the rule will default to an all data type method and there is no option to override with “Numeric Data Only”.

So best to keep the target application load method to the default value of “Numeric Data Only” as this way it is possible to select any of the methods when defining a load rule.

Another strange one is if you have a load rule set to a load method of “Numeric Data Only”

Then change to one of the all data type methods.

It is not possible to switch it back to numeric data only, the only values available are the all data type load methods.

If you try and enter “Numeric Data Only” manually and save then you get hit with an error.

If you try and use migration to import the rules back in it doesn’t seem to overwrite the property, yes you can delete the rule and recreate but remember if you try and delete the rule and data has already been loaded, then the data could be deleted.

I do consider them to be bugs and if they are ever addressed and fixed I will update this post.

Right, on to an issue that I have seen raised a few times which is usually down to load method selected:

“I am selecting the export method of “Add Data” but the data is being replaced in the target application”

To understand the problem, I think it is worth going through some examples of how the functionality works between numeric and all data type load methods.

To start with let us take a numeric type load with a simple source file that contains one row of data.

The import format was set to type “Delimited – Numeric Data” and the rule load method defined as “Numeric Data Only”.

The data was into imported and validated in the workbench.

To load the data to the target application there are four export modes available.

The documentation provides a clear definition of what these options do, what it doesn’t mention is that these relate to the numeric data only load method.

Store Data — Inserts the value from the source or file into the target application, replacing any value that currently exists.

Replace Data — Clears all data for the POV in the target, and then loads from the source or file. For example, when you have a year of data in your Planning application but are only loading a single month, this option clears the entire year before performing the load.

Add Data — Adds the value from the source or file to the value in the target application. For example, when you have 100 in the source, and 200 in the target, then the result is 300.

Subtract Data — Subtracts the value in the source or file from the value in the target application. For example, when you have 300 in the target, and 100 in the source, then the result is 200.

Before loading the data, I created a form to match the POV and entered a value.

The data was then loaded and the export mode selected was “Add Data”

As you would expect the data value loaded was added to the existing data.

In the process logs you can see that an export file and Essbase data load rule are created.

INFO [AIF]: Creating data file: /u03/inbox/outbox/Vision_1448.dat

INFO [AIF]: Data file creation completed: 1

DEBUG [AIF]: Created rule file: AIF0286

INFO [AIF]: Saved rule file to essbase server

The data is then loaded directly to Essbase using the data file and load rule.

If you have worked with Essbase you will know that export mode options for replace, add and subtract match to those that can be set in an Essbase load data load rule.

If I run an export again and change the mode to “Store Data”.

This time the data in the target application will be replaced with that from the data load.

All makes perfect sense, so now let us switch over to an all a data type load, the import format was updated to a type of “Delimited – All Data Type” and the load method in the rule set to “All data types with security”.

The data value in the target application was set back to 500.

The data is reloaded into the workbench and the export mode set to “Add Data”, this is done with an administrator account which means the load should be carried out by the OLU.

The process logs highlight the load method is now using the outline load utility (OLU).

DEBUG [AIF]: Overrode info.loadMethod for the admin user: OLU

Outline data store load process finished. 1 data record was read, 2 data records were processed, 2 were accepted for loading (verify actual load with Essbase log files), 0 were rejected.

Reloading the planning form shows that the data has been replaced instead of added to.

The reason for this is that is the way the OLU operates and to be honest the all data type is really aimed at loading non-numeric data such as text, dates or smart lists, if you think about it, it just wouldn’t make sense trying to add non-numeric data.

How about if the process is repeated but this time with a non-administrator account, this should mean the REST API will be the method for loading the data (currently only EPM cloud supports this).

Run the export again with export mode set to “Add Data”.

DEBUG [AIF]: LOAD_METHOD:HPL, LOAD_TYPE:DATA, EXPORT_MODE:ADD_DATA

DEBUG [AIF]: Overrode info.loadMethod for the non-admin user: REST

DEBUG [AIF]: No need to connect to Essbase server.

DEBUG [AIF]: requestUrl: http://localhost:9000/HyperionPlanning/rest/v3/applications/Vision/plantypes/Plan1/importdataslice

INFO [AIF]: Number of rows loaded: 1, Number of rows rejected: 0

Refreshing the data form confirms the data has been added to the existing value.

An example of the JSON created to load the data above is:

{

"aggregateEssbaseData":true,

"dateFormat":"MM-DD-YYYY",

"cellNotesOption":"Skip",

"dataGrid":{

"columns":[

[

"Actual"

]

],

"rows":[

{

"headers":[

"1110",

"110",

"Working",

"BaseData",

"P_000",

"FY17",

"Nov"

],

"data":[

"400"

]

}

]

}

}

The REST resource has a parameter called “aggregateEssbaseData”, the value is set to true when the export mode of “Add Data” is selected, if “Store Data” is selected then value is set as false.

The documentation on the REST API has some important information to consider when loading text and numeric data together using this method.

“aggregateEssbaseData which has a true or false value. If true, the values being saved will be added to the existing values. Only numeric values can be aggregated. Cells with Smart list, Text and Date data types will be rejected. If false, the data values will be overwritten.”

If you set the export mode to add data and there is a mixture of numeric and non-numeric data the non-numeric should be rejected, to prove this I added a row to the source to load text to a text type member.

The process log confirms the numeric data was loaded and the text data was rejected.

INFO [AIF]: Number of rows loaded: 1, Number of rows rejected: 1

INFO [AIF]: List of rejected cells: ["[Actual, Text Example, 110, Working, BaseData, P_000, FY17, Nov]"]

ERROR [AIF]: The data load process has failed.

So if you are loading numeric data that needs to be added to existing data then carefully consider the outcome when selecting a load method.

There are also differences between the load methods when loading multiple records against the same POV, I will go through an example to demonstrate this.

This time I have added an additional row to the source file which is against the same member combination as the previous row.

The load method has been set to “Numeric Data Only”

The data is summed before it is loaded to the target application which you can see by switching the view in the UI to target.

The data export file that is created before being loaded to the target application also confirms this.

As expected the data in the target application is the same as the “Store Data” option was selected.

If we repeat the process using the “All data types with security” load method with an admin user then it acts differently.

Checking the form after a successful load reveals that only one data value has been loaded.

The data export file contains only one value.

This is because when using the all data type method, instead of the values being summed the maximum value is loaded to the target and replaces any existing value.

If the process is repeated using a non-admin account so the load method switches to using the REST API the functionality acts in the same way and the maximum value will be loaded.

Another reason to stick with the “Numeric Data Only” load method when loading numerical data.

On to the final question which is:

“Is mapping data values possible with the all data type?”

Currently it is not possible with on-premise though I am sure that will change in a future patch, it wasn’t possible in EPM Cloud until a while back when data dimension appeared from nowhere in the usual cloud type way, I don’t remember reading about it in the monthly update documentation.

If you look at the available dimensions in cloud there is a “Data” dimension available.

The data dimension is visible whether it is a numeric data only load or all data type load but it only relates to the latter, if you do try to use it for numeric data only loads then it will be ignored.

Update: Available in on-premise FDMEE from 11.1.2.4.220

It can also be ignored for all data type loads if there are no mappings required, if there are no mappings applied it will map the source to the target automatically.

There nothing that different about using the data dimension for mappings but it is worth just putting a quick demonstration of it in action.

I am going to through a simple example of loading Smart List data, I created a new Smart List based on members in a hierarchy.

The name and are automatically generated, the name is created by prefixing an underscore to the original member name and the label is a combination of member name and alias.

The Smart List was applied against two account members and a form created to check the output from the data management load.

The source file is extremely basic with the two account members and data value for the Smart List.

The import format was set as a file type of “Delimited – All Data Type”.

The data load rule load method was defined as “All data types with security”.

For this first example, no mappings were added to the data dimension.

The process log shows the data was loaded successfully as there were no rejections:

Outline data store load process finished. 2 data records were read, 3 data records were processed, 3 were accepted for loading (verify actual load with Essbase log files), 0 were rejected.

The data form shows that the values have been loaded though they don’t match any of the Smart List entries because they have not been mapped correctly.

In the above example, the values loaded are numeric so they still get loaded to Essbase even if they are mapped incorrectly, if you try to load invalid Smart List entries with text data like:

I cleared out the data entries in the form and ran the export again.

The load looks to be successful from the log as it records that data was loaded:

Outline data store load process finished. 2 data records were read, 3 data records were processed, 3 were accepted for loading (verify actual load with Essbase log files), 0 were rejected.

In reality, no data will have been loaded as it did not match any Smart List entries which means it will have no numerical ID associated with it to load to Essbase.

Moving on, back to the original source file but this time I will add a mapping to the data dimension.

I have used the format function to prefix the source value with an underscore so it matches the format for the name of the Smart List entry.

Refreshing the form shows that the data has been mapped correctly this time as the Smart List entries are displayed with their associated label.

It is also possible to map the data to the Smart List label instead of the name.

The data will be mapped successfully in the target application with either the name or the label of the Smart List entry.

I think that covers as much as I need to on all data type mappings as they operate in pretty much the same way as standard dimension mappings.

As always I hope you found this post useful, until next time..